Big Data Services for Real-Time, High-Volume Workloads.

Romexsoft helps tech-driven companies and enterprises process massive, time-sensitive, high-throughput datasets with cloud-built, modern software that surpasses the capabilities of traditional systems.

Big Data Services We Provide

We deliver software solutions for instant analytics, scalable data distribution, and intelligent automation, all optimized for peak performance and large-scale operations.

Our experts align each step, from big data strategy to infrastructure and analytics, with your business goals and technical needs. We help you choose the right tools and architecture to support scalable, efficient big data solutions.

Building custom software tailored for big data workloads enables efficient data collection, processing, and analysis while integrating seamlessly with existing systems and supporting specific business needs.

Unlike traditional QA, which focuses on UI or API behavior, our approach targets large-scale data pipelines, transformation logic, and distributed processing. Our testing engineers ensure accuracy, job reliability, and end-to-end data integrity across batch and streaming.

Ingesting data from various sources and transforming it through batch and stream processing enables real-time insights, faster workflows, and more efficient use of large-scale, high-volume datasets.

Turning large-scale data into interactive dashboards and visual reports allows teams to explore trends, monitor metrics, and communicate insights clearly across the organization.

Storing large volumes of structured and unstructured data in scalable environments such as data lakes and warehouses ensures secure, efficient access for processing, analysis, and long-term use.

Ensuring proper governance and security involves setting access controls, maintaining data quality, and complying with regulations to protect sensitive information and support trusted analytics.

Combining data from diverse sources and building robust pipelines ensures consistent, reliable, and scalable data flow across systems, laying the foundation for accurate analysis and reporting.

Why Choose Romexsoft as Your Big Data Services Company

As an AWS Advanced Tier Services Partner with senior certified engineers and architects on board, we build cloud-native data platforms that accelerate insight delivery and reduce infrastructure complexity.

Every engagement is shaped by your goals, timelines, and feedback, ensuring a partnership built on trust and measurable results.

Modular, well-documented, and easy-to-extend software built to reduce tech debt and support fast iteration as systems evolve.

We design big data architectures that scale predictably and perform reliably over the long run. No surprises, no guesswork.

Have a Talk with Our Big Data Expert

Work with our senior engineers, who build production-ready solutions designed to handle your real workloads and goals.

Value Delivered Through Our Big Data Software Development

Today, companies deal with large and complex data from numerous sources. Managing and using this data efficiently requires the right tools, systems, and support. The points below explain how a big data solution can assist in that process.

Industries We Support with Big Data Services

Practical Applications of Our Big Data Solutions

We design and develop cloud-native big data software that helps clients turn complex data into actionable outcomes. Here are the use cases we most frequently deliver for data-driven businesses:

Our Big Data Implementation Process

Implementing a Big Data solution requires a structured, step-by-step approach to ensure reliability, scalability, and business value. Our process covers every stage, from defining the right strategy to operationalizing insights, so you can turn unstructured data into actionable outcomes with confidence.

The process begins with a deep dive into your business objectives, existing infrastructure, and data challenges. We work closely with stakeholders to define a tailored big data strategy, select suitable technologies, and outline a roadmap that aligns technical execution with business outcomes. This phase sets the foundation for a scalable, efficient, and value-driven data environment.

We collect data from diverse sources – internal systems, external APIs, logs, IoT devices, and more. Our team implements secure, high-throughput ingestion pipelines that support both batch and real-time data streams. This ensures that incoming data is captured reliably, with minimal latency and proper formatting, while maintaining consistent data management practices for downstream processing.

Based on your performance, scalability, and access requirements, we design a robust storage architecture. This may include a centralized data repository for raw and semi-structured data, and data stores for structured, analytics-ready datasets. Our big data solutions ensure high availability, cost-efficiency, and seamless integration with processing and analytics layers.

Using state-of-the-art frameworks like Spark, we process large-scale data in both batch and stream modes. The goal is to clean, enrich, and transform collected data into usable formats that support real-time insights and historical analysis. Processing workflows are optimized for speed, fault tolerance, and scalability.
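As a rough illustration of the clean, enrich, and transform steps, here is a minimal sketch in plain Python rather than Spark, so that it runs without a cluster. The event fields, the `REGION_BY_COUNTRY` lookup, and the function names are all hypothetical and chosen only for this example; a production job would express the same logic in a distributed framework.

```python
from collections import defaultdict

# Hypothetical raw events; the field names are illustrative only.
RAW_EVENTS = [
    {"user_id": "u1", "country": "us", "amount": "19.99"},
    {"user_id": "u2", "country": "DE", "amount": "5.00"},
    {"user_id": None, "country": "us", "amount": "7.50"},  # missing key, dropped
    {"user_id": "u3", "country": "de", "amount": "oops"},  # bad number, dropped
    {"user_id": "u1", "country": "US", "amount": "0.01"},
]

# Hypothetical lookup table used for enrichment.
REGION_BY_COUNTRY = {"US": "Americas", "DE": "EMEA"}


def clean(events):
    """Drop records without a user_id or with a non-numeric amount."""
    for event in events:
        if not event.get("user_id"):
            continue
        try:
            amount = float(event["amount"])
        except (TypeError, ValueError):
            continue
        # Normalize the country code and store the amount as a number.
        yield {**event, "country": event["country"].upper(), "amount": amount}


def enrich(events):
    """Attach a region to each record using the lookup table."""
    for event in events:
        region = REGION_BY_COUNTRY.get(event["country"], "Unknown")
        yield {**event, "region": region}


def transform(events):
    """Aggregate the cleaned, enriched records into revenue per region."""
    totals = defaultdict(float)
    for event in events:
        totals[event["region"]] += event["amount"]
    return {region: round(total, 2) for region, total in totals.items()}


revenue_by_region = transform(enrich(clean(RAW_EVENTS)))
print(revenue_by_region)
```

Because each stage is a generator, records flow through one at a time, which loosely mirrors how the same steps would be chained in a batch or streaming job.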

We apply advanced analytics techniques, including statistical modeling, data mining, and machine learning, to extract actionable insights from the processed data. This step helps uncover patterns, predict trends, and support use cases such as customer segmentation, anomaly detection, and forecasting, all of which drive measurable business value.
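To make one of these use cases concrete, the sketch below shows a very simple form of anomaly detection based on z-scores, using only the Python standard library. The `daily_orders` values, the `find_anomalies` helper, and the threshold of 2.0 are assumptions made for illustration; real projects typically use more robust statistical or ML-based methods.

```python
from statistics import mean, stdev


def find_anomalies(values, threshold=2.0):
    """Return (index, value) pairs lying more than `threshold`
    sample standard deviations away from the mean."""
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (index, value)
        for index, value in enumerate(values)
        if abs(value - mu) / sigma > threshold
    ]


# Hypothetical daily order counts with one obvious spike.
daily_orders = [102, 98, 105, 97, 101, 99, 103, 100, 240, 104]

anomalies = find_anomalies(daily_orders)
print(anomalies)
```

A z-score is easy to explain to stakeholders, but the outlier itself inflates the mean and standard deviation, so this approach works best as a first pass on small, well-behaved series.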

To make insights accessible and understandable, we build interactive dashboards, reports, and visual analytics tools. These visualizations are tailored to your users and use cases, enabling teams to monitor KPIs, explore trends, and share findings effectively across departments.

We deploy models and data workflows into production environments and set up automated monitoring systems. These include alerts, performance tracking, and feedback loops to continuously assess data quality, model accuracy, and system reliability, ensuring long-term success and adaptability of your big data solution.
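An automated data quality check of the kind used in such monitoring can be sketched as follows. This is a minimal example under stated assumptions: the `null_rate` and `check_batch` helpers, the 5% threshold, and the sample batch are hypothetical, and a real setup would send the alerts to a monitoring or paging system instead of printing them.

```python
def null_rate(records, field):
    """Share of records where `field` is missing, None, or empty."""
    if not records:
        return 0.0
    missing = sum(1 for record in records if record.get(field) in (None, ""))
    return missing / len(records)


def check_batch(records, required_fields, max_null_rate=0.05):
    """Return one alert message per field whose null rate is too high."""
    alerts = []
    for field in required_fields:
        rate = null_rate(records, field)
        if rate > max_null_rate:
            alerts.append(
                f"{field}: null rate {rate:.0%} exceeds {max_null_rate:.0%}"
            )
    return alerts


# Hypothetical batch of incoming records.
batch = [
    {"order_id": 1, "email": "a@example.com"},
    {"order_id": 2, "email": ""},
    {"order_id": 3, "email": None},
    {"order_id": 4, "email": "d@example.com"},
]

alerts = check_batch(batch, ["order_id", "email"])
for alert in alerts:
    print(alert)
```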

Big Data Technologies We Use

Data Warehouse and Distributed Storage

Databases

Data Streaming and Stream Processing

Machine Learning

Frequently Asked Questions About Big Data

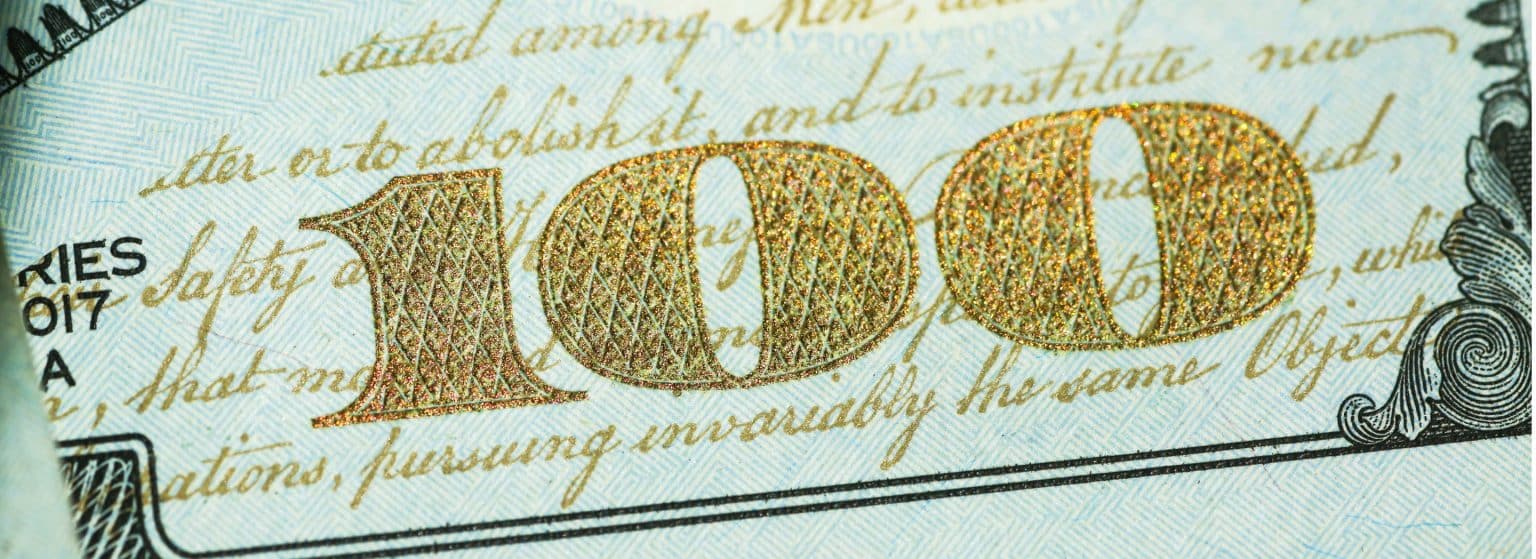

In the early days, companies like Google, Yahoo, Facebook, and LinkedIn discovered the need to develop specific approaches for working with data at a larger scale, which gave birth to particular programming models and toolsets. Nowadays, almost any mid-sized or large business can benefit from applying Big Data methodologies.

The classical definition of Big Data is captured by the so-called 3 Vs of Big Data:

- Volume – when you have a large amount of data to store and/or process, on the terabyte or petabyte scale and beyond.

- Velocity – when the speed of processing and sub-second latency from ingestion to serving matter.

- Variety – when you have a lot of metadata to manage and govern. Imagine a relational database with thousands of tables, each with thousands of columns, that you need to catalog and manage access to.

Data analytics includes a review of data needs and the design of an appropriate data analytics workflow. Concrete approaches are defined depending on your business focus and current situation. Roughly speaking, there are 4 interconnected and codependent stages involved in Big Data processing: aggregation, analysis, processing, and distribution. For each of these steps, certain tools and techniques are applied. Big Data experts build infrastructures and models for data processing to cover the demands of specific businesses.

There are four types of data analytics: descriptive, diagnostic, predictive, and prescriptive.

- Descriptive analytics is the most common and basic type, and is used to identify and “describe” general trends and patterns. It is mostly applied for the analysis of the company’s operational performance, translating these insights into reports or other readable forms.

- Diagnostic analytics is a more advanced type, which comes into play when you want to investigate the reasons for certain trends and behaviors.

- Predictive analytics forecasts future trends and behaviors based on insights drawn from historical and present data.

- Prescriptive analytics relies on the results of descriptive and predictive analytics to suggest the best future decision for your business. It can efficiently help you find the right solution to a problem.
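The difference between these types can be illustrated with a toy example in plain Python. All figures below (`monthly_sales`, `promo_ran`, `stock_on_hand`) are made up for illustration, and the linear trend is only one simple way to forecast; it is a sketch, not a recommended modeling approach.

```python
from statistics import mean

# Hypothetical data: units sold per month and whether a promotion ran.
monthly_sales = [100, 120, 90, 130, 150, 160]
promo_ran = [False, True, False, True, True, True]
stock_on_hand = 150  # hypothetical current inventory

# Descriptive: what happened? Average monthly sales.
average_sales = mean(monthly_sales)

# Diagnostic: why did it happen? Compare months with and without a promotion.
with_promo = mean(s for s, p in zip(monthly_sales, promo_ran) if p)
without_promo = mean(s for s, p in zip(monthly_sales, promo_ran) if not p)
promo_lift = with_promo - without_promo

# Predictive: what is likely to happen? Fit a least-squares trend line
# over the month index and extrapolate one month ahead.
months = list(range(len(monthly_sales)))
x_mean, y_mean = mean(months), mean(monthly_sales)
slope = sum(
    (x - x_mean) * (y - y_mean) for x, y in zip(months, monthly_sales)
) / sum((x - x_mean) ** 2 for x in months)
intercept = y_mean - slope * x_mean
forecast = intercept + slope * len(monthly_sales)

# Prescriptive: what should we do? Turn the forecast into an action.
if forecast > stock_on_hand:
    recommendation = {"action": "reorder", "units": round(forecast - stock_on_hand)}
else:
    recommendation = {"action": "hold", "units": 0}

print(average_sales, promo_lift, round(forecast, 1), recommendation)
```

Each step builds on the previous one: the forecast uses the same history that the descriptive and diagnostic steps summarize, and the recommendation is derived from the forecast.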

Big Data and AI-powered analytics are interconnected, yet they do not mean the same thing. Big Data designates all types of data, characterized by volume, variety, and velocity, that are extracted from different sources and require special systems and modeling techniques to be processed efficiently. AI-powered analytics is, in turn, a set of scientific activities applied to process big data for specific business goals. It requires expertise in a number of fields, such as mathematics, computer science, statistics, and AI. We can say that the concept originated from Big Data.

Apache Spark is a powerful and robust cluster computing tool that gives its users access to:

- Parallel data processing across multiple computers in a cluster, which greatly speeds up delivery.

- Work with many types of data storage, from file systems and SQL databases to real-time streams coming from different sources. You simply share access to your data, and we can start analyzing it right away.

- Built-in machine learning algorithms in Spark's ML module (MLlib), which run as distributed data processing jobs and work seamlessly with real-time operations.

If you have piles of data, our DSaaS team will prepare it for the analysis, run the algorithms and present their findings whenever you need them.

Explore key concepts, technologies, and use cases that show how big data and analytics.